Experimenters often look at their results and aren't sure whether there's enough data to make a decision, or which decision to make. They're left asking, "Should I keep running my experiment?" and "Do my results meet my success criteria?"

The Experiment Decision Framework (EDF) solves this by automating both decisions. It’s a set of customizable settings that automatically determines when your experiment has collected sufficient data and recommends what to do based on your predefined business criteria.

How it works

Target Minimum Detectable Effects (MDEs): Set the smallest effect size worth detecting for each metric. If a 2% improvement in conversion isn't meaningful for your business, set a larger target MDE, such as 5%. The framework only renders a decision once you have enough statistical precision to reliably detect your target effect.

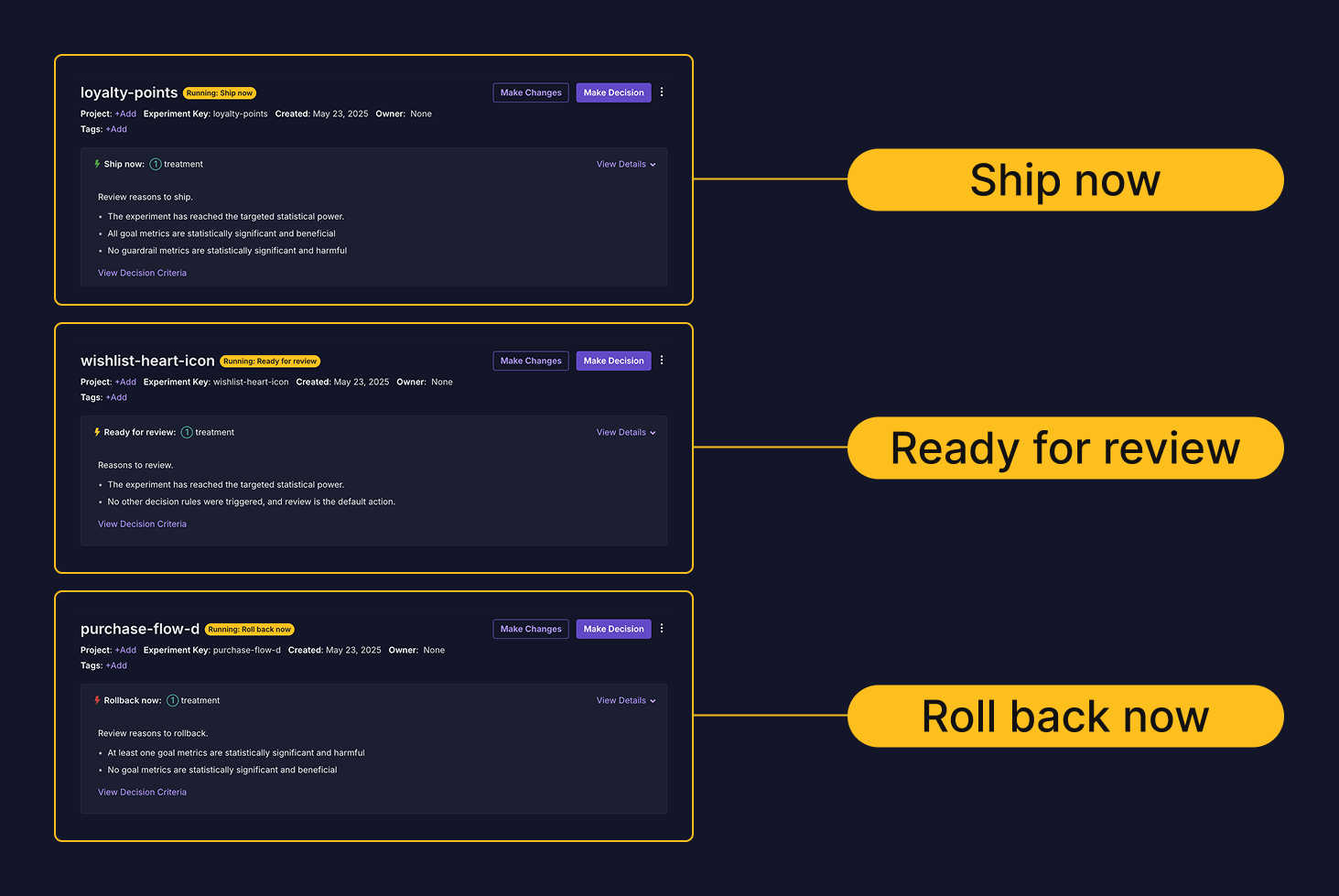
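The target MDE largely determines how long an experiment runs. The minimal sketch below uses the textbook sample-size calculation for a conversion-rate metric. It assumes a two-sided two-sample z-test, a 5% significance level, 80% power, and an MDE expressed as a relative lift. These are illustrative assumptions and not necessarily what the framework's statistics engine uses. `required_sample_per_arm` is a hypothetical helper.

```python
import math
from statistics import NormalDist


def required_sample_per_arm(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users per arm needed to detect a relative lift of
    `relative_mde` on a conversion rate (two-sided z-test, normal approximation).
    Assumed parameters for illustration only."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = z.inv_cdf(power)          # about 0.84 for 80% power
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    # Sum of the per-arm Bernoulli variances under the two hypothesized rates.
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    delta = p2 - p1  # absolute difference we want to be able to detect
    return math.ceil((z_alpha + z_power) ** 2 * variance / delta ** 2)


if __name__ == "__main__":
    for mde in (0.02, 0.05):
        print(f"{mde:.0%} MDE: {required_sample_per_arm(0.10, mde):,} users per arm")
```

Because the required sample scales roughly with 1/MDE², raising the target from 2% to 5% at a 10% baseline conversion rate cuts the users needed per arm by roughly a factor of six.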

Decision Criteria: Define shipping logic before you see results. Examples:

- Ship if all goal metrics are statistically significant and positive

- Ship unless any guardrail metric is statistically significant and negative

- Custom rules based on your business logic

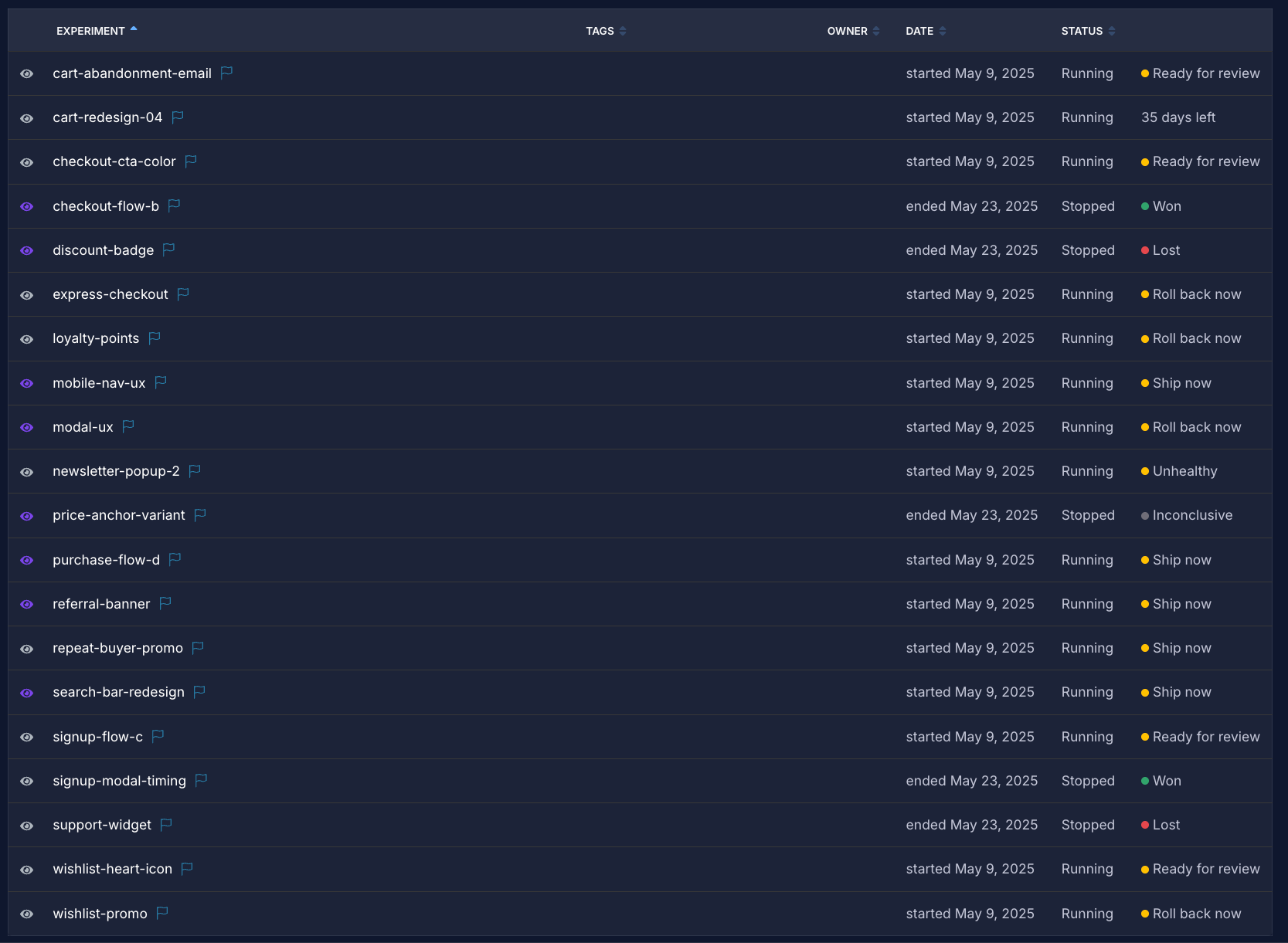
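The two example rules above can be written as small predicates over each metric's confidence interval. This is a hypothetical sketch, not the product's implementation. `MetricResult` and the rule functions are illustrative names. Here "significant" means the interval excludes zero, and lifts are assumed to be oriented so that positive means beneficial. A metric where lower is better would need its sign flipped first.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    name: str
    role: str       # "goal" or "guardrail" (hypothetical labels)
    ci_low: float   # lower bound of the lift's confidence interval
    ci_high: float  # upper bound of the lift's confidence interval

    @property
    def significant_positive(self) -> bool:
        # The whole interval lies above zero.
        return self.ci_low > 0

    @property
    def significant_negative(self) -> bool:
        # The whole interval lies below zero.
        return self.ci_high < 0


def ship_if_all_goals_win(results: list[MetricResult]) -> bool:
    """Ship if every goal metric is statistically significant and positive."""
    goals = [r for r in results if r.role == "goal"]
    return bool(goals) and all(r.significant_positive for r in goals)


def ship_unless_guardrail_hurt(results: list[MetricResult]) -> bool:
    """Ship unless some guardrail metric is statistically significant and negative."""
    return not any(r.significant_negative for r in results if r.role == "guardrail")
```

Applied to the checkout example later in this post, a conversion-rate goal with an interval of +3% to +11% satisfies the first rule: `ship_if_all_goals_win([MetricResult("conversion_rate", "goal", 0.03, 0.11)])` returns `True`.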

Automated Status Updates: Your experiment shows exactly where it stands:

- "~7 days left" - Need more data to reach target precision

- "Ship now" - Results meet your shipping criteria

- "Roll back now" - Results meet your rollback criteria

- "Ready for review" - Mixed results requiring human judgment
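One way to produce these statuses is to check precision first and apply the decision rules only after that. The sketch below is an assumption about the logic, not a description of the framework's internals. `experiment_status` is hypothetical, and `current_mde` stands for the smallest effect detectable with the data collected so far. The days-left estimate assumes steady daily traffic, so the detectable effect shrinks in proportion to 1/sqrt(days).

```python
import math


def experiment_status(current_mde: float, target_mde: float, days_elapsed: float,
                      meets_ship: bool, meets_rollback: bool) -> str:
    """Map precision and decision-rule outcomes to a status label (hypothetical)."""
    if current_mde > target_mde:
        # Assumed model: mde(t) = current_mde * sqrt(days_elapsed / t).
        # Solving mde(t) = target_mde gives t = days_elapsed * (current / target) ** 2.
        total_days = days_elapsed * (current_mde / target_mde) ** 2
        days_left = max(1, math.ceil(total_days - days_elapsed))
        return f"~{days_left} days left"
    if meets_ship:
        return "Ship now"
    if meets_rollback:
        return "Roll back now"
    # Precision reached but neither rule fired: mixed results.
    return "Ready for review"
```

For example, if five days of data only support a 10% MDE against a 5% target, the estimate is 5 × (10/5)² = 20 days in total. In that case `experiment_status(0.10, 0.05, 5, False, False)` returns `"~15 days left"`.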

Real example

You're testing a checkout redesign with a 5% target MDE for conversion rate:

Day 10: Confidence interval of +3% to +11% for conversion rate

Status: Ship now

Reasoning: The goal metric is statistically significant and beneficial under the "Clear Signals" decision criteria.

The framework determined that you have sufficient precision (the interval width corresponds to your 5% target MDE) and that the result meets your predefined shipping criteria, because the entire interval lies above zero.

What this fixes

False positives: Teams that ship as soon as an experiment reaches statistical significance, instead of waiting for a predetermined sample size, run into the peeking problem. Checking results repeatedly and stopping at the first significant reading inflates the false positive rate well above the nominal level.

Inconsistent shipping decisions: Without predefined criteria, teams make different decisions on similar results depending on business pressure or personal bias. Setting criteria upfront eliminates this inconsistency.

Low-powered experiments: Many teams run experiments that can't reliably detect the effects they care about. Target MDEs force you to decide up front which effect sizes are actually worth measuring.
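The peeking problem is easy to demonstrate with a simulation of A/A tests. In an A/A test there is no real effect, so every significant result is a false positive. This is a generic sketch of the statistical phenomenon, not of the framework itself. The 20 interim looks, the seed, and the function name are arbitrary choices. The simulation compares stopping at the first look with |z| > 1.96 against testing only once, at the planned end.

```python
import random
from statistics import NormalDist

Z_CRIT = NormalDist().inv_cdf(0.975)  # two-sided 5% threshold, about 1.96


def false_positive_rates(n_sims: int = 4000, n_looks: int = 20, seed: int = 7):
    """Simulate A/A tests and return (peeking rate, fixed-horizon rate).

    Each look adds one batch of data. The running z-statistic after k looks is
    the sum of k independent standard-normal batch statistics divided by sqrt(k).
    """
    rng = random.Random(seed)
    peeking_hits = 0  # would have stopped at some look with |z| > threshold
    fixed_hits = 0    # tested only once, at the final look
    for _ in range(n_sims):
        total = 0.0
        crossed = False
        for k in range(1, n_looks + 1):
            total += rng.gauss(0.0, 1.0)
            z = total / k ** 0.5
            if abs(z) > Z_CRIT:
                crossed = True
        peeking_hits += crossed
        fixed_hits += abs(z) > Z_CRIT  # z here is the final-look statistic
    return peeking_hits / n_sims, fixed_hits / n_sims


if __name__ == "__main__":
    peek, fixed = false_positive_rates()
    print(f"peeking: {peek:.1%}, fixed horizon: {fixed:.1%}")
```

With a single final test, the false positive rate stays near the nominal 5%. Stopping at the first significant reading across 20 looks typically pushes it to several times that.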

Setup

Available to Pro and Enterprise customers in Settings → General → Experiment Settings. Set organization defaults for target MDEs and decision criteria, then customize them per experiment on the Experiment Overview tab.
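The layering of organization defaults and per-experiment settings, including the per-metric MDE overrides mentioned under Implementation notes, can be pictured as a simple override lookup. Everything here is hypothetical. The class names, field names, and default values are illustrative and do not reflect the product's actual settings schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OrgDefaults:
    # Hypothetical organization-wide defaults.
    target_mde: float = 0.05
    decision_criteria: str = "Clear Signals"


@dataclass
class ExperimentSettings:
    # None means "inherit the organization default".
    decision_criteria: Optional[str] = None
    # Hypothetical per-metric target MDE overrides, keyed by metric name.
    metric_mdes: dict[str, float] = field(default_factory=dict)


def resolve_settings(org: OrgDefaults, exp: ExperimentSettings,
                     metric: str) -> tuple[float, str]:
    """Return (target MDE, decision criteria) for one metric, preferring experiment overrides."""
    mde = exp.metric_mdes.get(metric, org.target_mde)
    if exp.decision_criteria is not None:
        criteria = exp.decision_criteria
    else:
        criteria = org.decision_criteria
    return mde, criteria
```

A metric without an override falls back to the organization default. For example, `resolve_settings(OrgDefaults(), ExperimentSettings(metric_mdes={"revenue_per_user": 0.10}), "conversion_rate")` returns `(0.05, "Clear Signals")`.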

The framework works best with 1-2 goal metrics. Every goal metric has to reach its target precision, so each additional metric can substantially increase the time needed, especially if that metric is noisier or rarer than the others.
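Extra goal metrics slow things down because the experiment can't reach a decision until its slowest metric reaches target precision. The rough sketch below compares sample requirements up to a constant factor. It assumes conversion-style metrics and a relative MDE, and the metric names and baseline rates are hypothetical.

```python
def relative_sample_need(baseline_rate: float, relative_mde: float) -> float:
    """Sample size for a conversion metric, up to a constant factor.

    For a two-sample test on a rate p and a relative lift d, the required n per arm
    is proportional to 2p(1-p) / (d*p)**2 = 2(1-p) / (p * d**2).
    """
    p, d = baseline_rate, relative_mde
    return 2 * (1 - p) / (p * d ** 2)


def binding_metric(metrics: dict[str, tuple[float, float]]) -> str:
    """Return the metric that needs the most data; it sets the experiment's duration."""
    return max(metrics, key=lambda m: relative_sample_need(*metrics[m]))


# Hypothetical goal metrics: name -> (baseline rate, target relative MDE).
goals = {"checkout_conversion": (0.10, 0.05), "plan_upgrade": (0.01, 0.05)}
```

At the same 5% target MDE, a metric with a 1% baseline rate needs 11 times the sample of one with a 10% baseline. Under steady traffic, adding `plan_upgrade` as a second goal metric would therefore stretch the experiment roughly elevenfold.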

Implementation notes

The decision framework uses your existing experiment data and statistical engine. It doesn't change how you run experiments; it just adds structured decision-making on top.

You can override target MDEs per metric and switch decision criteria per experiment. The framework respects your minimum experiment runtime setting and won't show decisions during early data collection periods.

The EDF is available now. Have questions about implementation or edge cases? Reach out. We'd like to hear how you're using it.