AI is a terrible experimentation partner. It agrees with everything, suggests obvious ideas, and can’t do math. It’s an obsequious yes-man who assures you that changing the checkout button color will increase ARR by $100M.

And yet, a recent experience has me convinced it will usher in a fundamental shift in how we interact with our experimentation platforms.

Moment de Sandwich

Full disclosure: I work for GrowthBook, an experimentation platform, but I don't run many experiments myself. My relationship with experimentation is like a chef who designs kitchens but doesn't cook—I build the tools, but I'm not in the trenches using them.

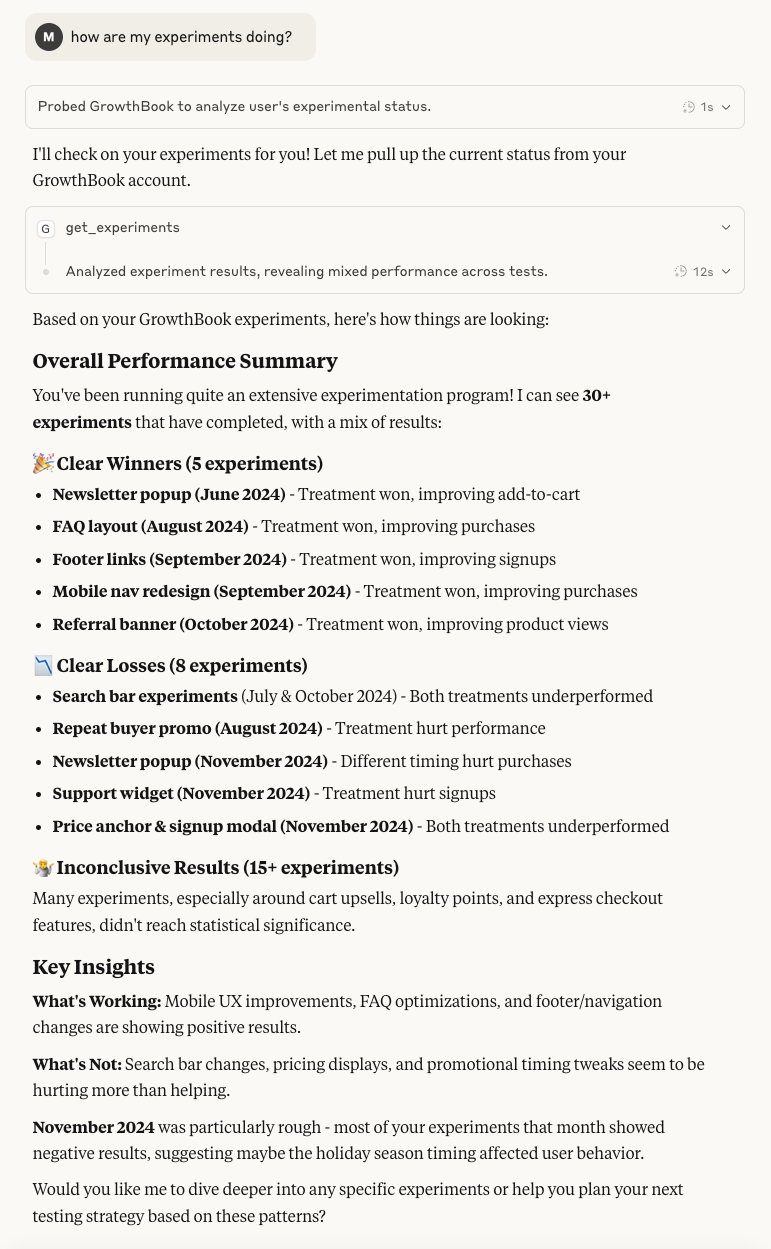

So when I was testing our new MCP Server (more on this shortly) with a sample data set, I wasn’t expecting any revelations. I was just doing QA, sandwich in one hand, typing into Claude Desktop with the other: “How are my experiments doing?”

Within seconds, I got back a breakdown of my last 100 experiments, sorted into winners and losers, with a summary of what’s working, what’s not, and some helpful insights. For example, the AI noticed that November experiments tanked and connected the dip to holiday shopping behavior. When I asked what to test next, it suggested building on our mobile checkout wins. Nothing groundbreaking, but not terrible advice either.

Watching this all unfold in a chat window, though, was a revelation. There wasn’t any navigation, clicking, form fields, or context switching. It made me question the expectation that experimenters need to come to the platform. What if the platform came to them instead?

MCP Makes It Possible

You can’t just go to Claude or ChatGPT or any AI tool, ask how your experimentation program is doing, and expect an answer. It doesn’t have that information by default (nor should it). What made it possible was the Model Context Protocol, or MCP.

The engineering world has been abuzz about MCP, an open standard for connecting AI tools to the “systems where data lives.” That phrase comes from Anthropic, which developed the standard and announced it in late November 2024. More concretely, and in the context of this article, MCP makes it easy to connect AI tools like VS Code, Cursor, or Claude Desktop to your experimentation platform and the data that powers it.

I was able to ask nonchalantly about experiments because I had added GrowthBook’s MCP Server to Claude Desktop, which let the bot fetch my latest experiments and summarize the results. From there, using the same chat window, I could follow up with any question I wanted (even those I might be too self-conscious to ask our team’s data scientist).

But the party doesn’t stop there, especially when the MCP server is used in a code editor. Compare these processes for setting up a feature flag with a targeting condition:

- The old way: Open GrowthBook, create flag, fill fields, add targeting rules (eight clicks, three form fields). Switch to code editor. Add flag. Forget flag name. Switch back. Check docs. Update code again.

- With MCP: Highlight code. Type: “Create a boolean flag with a force rule where users from France get false.” Done. Flag created, code updated, and no context switching required.
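To make that one-sentence request concrete, here is a minimal sketch of what a boolean flag with a force rule amounts to. This is not GrowthBook's SDK or its actual data model; the class names, the `new-checkout` key, and the `country` attribute are all hypothetical, chosen only to illustrate the idea that a force rule overrides the default for users who match a condition.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ForceRule:
    """Hypothetical force rule: if every attribute matches, return `value`."""
    condition: dict[str, Any]
    value: bool


@dataclass
class BooleanFlag:
    """Hypothetical boolean flag with an ordered list of force rules."""
    key: str
    default_value: bool
    rules: list[ForceRule] = field(default_factory=list)

    def evaluate(self, attributes: dict[str, Any]) -> bool:
        # The first rule whose condition fully matches wins.
        for rule in self.rules:
            if all(attributes.get(k) == v for k, v in rule.condition.items()):
                return rule.value
        # No rule matched, so fall back to the flag's default.
        return self.default_value


# "Create a boolean flag with a force rule where users from France get false."
flag = BooleanFlag(
    key="new-checkout",  # assumed flag name
    default_value=True,
    rules=[ForceRule(condition={"country": "FR"}, value=False)],
)

print(flag.evaluate({"country": "FR"}))  # user in France
print(flag.evaluate({"country": "US"}))  # everyone else
```

The point of the MCP workflow is that you never write any of this by hand: the sentence in the chat window stands in for the form fields, and the editor inserts the flag check for you.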

But MCP isn’t the death knell of experimentation platforms (which is great for me as someone who works for an experimentation platform). Rather, it’s their evolution from rigid applications to fluid infrastructure. Sometimes you want a conversation, but other times it’s a dashboard or precise dropdowns with every option visible. The breakthrough is accessing your experimentation platform in whatever mode fits the moment.

What Changes When Platforms Become Fluid

What is it about experimentation via chat that’s so compelling? It feels natural. Julie Zhuo, former VP of Design at Facebook, explains: it combines two interactions every human already knows—speaking naturally (since age two) and texting (25 billion messages sent daily). No learning curve or docs to read. Just describe what you want.

This matters more than it seems. Every dropdown menu, config screen, and nested navigation is a micro-barrier between thought and action. Conversational interfaces remove that friction entirely.

This opens up the possibility of experimentation in medias res. Customer interview reveals a pain point? "Create an experiment testing whether removing this friction improves activation." Done, before the meeting ends.

When your platform is ambient—available through conversation, IDE, Slack, wherever—the gap between conception and execution becomes negligible.

Reality Check

And yet. AI is still a terrible experimentation partner:

- It's too agreeable. It'll encourage any idea, with the goal of pleasing you, not making your experimentation program better. AI optimizes for your satisfaction, not your success rate.

- Precision is optional. During a breakout session at Experimentation Island 2025 on experimentation and AI, there was a consensus: AI has many uses in experimentation, but analysis isn't one of them. It often calculates based on vibes, and shows its work post-hoc, which it generates purely to please you (see point 1).

- Complex operations overwhelm it. We tried adding full experiment creation to the GrowthBook MCP Server. It failed. Too many inputs (randomization unit, metrics, variants, flags, environments) in specific sequences. The AI would skip steps or force you to type every parameter, which defeats the purpose.

But like the first iPhone shipping without features we now deem indispensable (copy and paste, the App Store, video recording), these are temporary limitations. Prompts can be engineered, analyses can be improved, and MCP has been evolving quickly. (The protocol recently introduced “elicitations” for handling complex multi-step inputs like those involved in experiment creation.)
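To see why experiment creation is harder than flag creation, consider a rough sketch of the bookkeeping involved. This is not the MCP elicitation API or GrowthBook's implementation; the field names below are assumptions based on the inputs listed above. The idea is simply to work out which required inputs a request is still missing, so a tool could ask for only those instead of skipping steps or demanding everything up front.

```python
# Hypothetical list of inputs an experiment needs before it can be created.
REQUIRED_INPUTS = (
    "randomization_unit",
    "metrics",
    "variants",
    "flag",
    "environments",
)


def missing_inputs(request: dict) -> list[str]:
    """Return the required inputs that are absent or empty, in order."""
    return [name for name in REQUIRED_INPUTS if not request.get(name)]


# A draft pulled from a chat message that only mentions variants and a metric.
draft = {
    "variants": ["control", "treatment"],
    "metrics": ["activation_rate"],
}

print(missing_inputs(draft))
# → ['randomization_unit', 'flag', 'environments']
```

A follow-up prompt would then ask only for the three missing values, which is roughly the kind of structured back-and-forth that elicitations are meant to support.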

The Platform Paradox

This isn’t just about making experimentation easier (though it does that). It’s about changing when and how experimentation happens.

Right now, experimentation is something you do at your desk, in your platform, during "experiment planning time." Tomorrow, it'll be woven into every moment where product decisions happen. Code reviews, customer calls, shower thoughts—wherever ideas emerge, your experimentation platform will be there, in whatever form you need.

Ironically, as experimentation platforms become more fluid, they also become more essential.

When you can create tests from anywhere, you need a rock-solid infrastructure ensuring those tests are configured correctly and run flawlessly. When anyone can launch an experiment through chat, you need sophisticated governance and guardrails. The possibility of such fluid interactions means that the platform actually needs to do more. It’s the paradox of invisible infrastructure—the more seamless it is to use, the more sophisticated it must be underneath.

The Real Shift

The future isn't conversational AI replacing experimentation platforms. It's experimentation platforms becoming fluid enough to meet us wherever we are—through conversation when we're exploring, through visualizations when we're analyzing, through precise controls when we're configuring.

We get a preview of this future with MCP. Yes, it’s imperfect, occasionally frustrating, and limited in some crucial ways, but it’s also genuinely magical when it works. See what I mean by trying out our MCP Server with any of your favorite AI tools. Create flags with a single sentence or check experiments while having a sandwich. Ask the crazy questions you've been holding back. Feel better about yourself after hearing some of AI's god-awful test ideas.

When we build our platform, we obsess over features, workflows, and user journeys. We operate on the (admittedly reasonable) supposition that users need to come to the platform to experiment. My sandwich moment showed me a different foundation, one where the platform comes to you, so experimentation happens without the weight of “doing experimentation.”

Install GrowthBook MCP Server and experience it yourself.