Controlled experiments, or A/B tests, are the gold standard for determining the impact of new product releases. Top tech companies know this, running countless tests to squeeze out the truth of user behavior. But here’s the rub: many experimentation programs don’t reach that level of maturity. Many fail to make their systems repeatable, scalable, and above all, impactful.

While developing the GrowthBook platform, we spoke to hundreds of experimentation teams and experts. Here are the most common ways we’ve seen experimentation programs falter, and some ways to avoid them.

Low Experiment Frequency

The humbling truth about A/B testing? Your chances of winning are usually under 30% - and the odds of large wins are even lower. Perversely, as you optimize your product, these winning percentages decrease. If you’re only running a few tests a quarter, the chances of landing a large winning experiment in a year are not good. As a result, companies may lose faith in experimentation due to a lack of significant wins.
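
To make that arithmetic concrete, here is a minimal sketch. It assumes tests are independent and share one fixed chance of a large win; the 5% figure is a hypothetical placeholder, not a number from our data.

```python
def prob_at_least_one_win(win_rate: float, num_tests: int) -> float:
    """Chance of at least one win in num_tests independent experiments."""
    if not 0.0 <= win_rate <= 1.0:
        raise ValueError("win_rate must be between 0 and 1")
    if num_tests < 0:
        raise ValueError("num_tests must be non-negative")
    # Complement of "every single test fails to win".
    return 1.0 - (1.0 - win_rate) ** num_tests


if __name__ == "__main__":
    LARGE_WIN_RATE = 0.05  # hypothetical, not a figure from this article
    for tests_per_year in (8, 50, 200):
        chance = prob_at_least_one_win(LARGE_WIN_RATE, tests_per_year)
        print(f"{tests_per_year:>3} tests/year -> {chance:.0%} chance of a large win")
```

Under these assumptions, a team running 8 tests a year has roughly a one-in-three chance of seeing any large win, while a team running 50 is above 90%.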

Successful companies embrace the low odds by upping their testing frequency. The secret is making each experiment as low-cost and low-effort as possible. Lowering the effort means that you can try many more hypotheses without over-investing in ideas that didn't work. Try to identify the smallest change that will signal whether the idea will affect the metrics you care about. Automate where you can, and use tools like GrowthBook (shameless plug!) to streamline the process and scale the number of tests you can run. Additionally, build trust in the value of frequent testing by demonstrating how even small insights compound over time into meaningful change.

Biases and Myopia

Assumptions about product functionality and user behavior often go unchallenged within organizations. These biases, whether personal or communal, can severely limit the scope of experimentation. At my last company, we had a paywall that was assumed to be optimized because it had been tested years ago. It was never revisited until a bug from an unrelated A/B test led to an unexpected spike in revenue. This surprising result prompted a complete reassessment of the paywall, ultimately leading to one of our most impactful experiments.

This scenario illustrates a common pitfall: Organizations often believe they know what users want and are resistant to testing what they assume is correct—a phenomenon known as the Semmelweis Reflex, in which new knowledge is rejected because it contradicts entrenched norms.

A big part of running a successful experimentation program is removing bias about which ideas will work and which will not. If you don't consider all ideas, you limit your potential success. This myopia can also happen when growth teams are not open to new ideas, or cannot sustain their rate of fresh ideas. In these cases, they can start testing the same kinds of ideas over and over. Without fresh ideas, it can be extremely hard to achieve significant results, and without significant results, it can be hard to justify continuing to experiment.

The key to resolving these issues is to recognize that you or your team may have blind spots. Kick any assumptions to the curb. Test everything—even the things you think are “perfect.” Talk to users, customer support, and even browse competitors for fresh ideas. Remember: success rates are low because we’re not as smart as we think we are.

High Cost

Enterprise A/B testing platforms can be expensive. Most commercial tools charge based on tracked users or events, meaning the more you test, the more you pay. Since success rates are typically low, frequent testing is critical for meaningful results. However, without clear wins, it becomes difficult to justify the ROI of an expensive platform, and experimentation programs can end up on the chopping block.

The goal should be to drive the cost per experiment as close to zero as possible. One way to save money is to build your own platform. While this reduces the long-term cost of each experiment, the upfront investment is steep. Building a system in-house typically requires teams of 5 to 20 people and can take anywhere from 2 to 4 years. This makes sense for large enterprises, but for smaller companies, it's hard to justify the time and resources.

An alternative is to use an open-source platform that integrates with your existing data warehouse. GrowthBook does exactly that. It delivers enterprise-level testing capabilities without requiring an entire infrastructure to be built from scratch. By lowering costs, you can sustain frequent testing and build trust with leadership by showing how your experimentation delivers valuable insights without breaking the bank.

Effort

Nothing kills the momentum of an experimentation program like a labor-intensive setup. I’ve seen teams where it took weeks just to implement one test. And after the test finally ran, they had to manually analyze the results and create a report—a painstaking, repetitive process that limited them to just a few tests per month. Even worse, this created significant opportunity costs for the employees involved, who could have been working on more impactful projects.

Successful programs minimize the effort required to run A/B tests by streamlining and automating as much as possible. Setting up, running, and analyzing experiments should be as simple as possible. Experiment reporting must be self-service, and creating a test should require minimal setup - ideally just a few steps beyond writing a small amount of code. The easier it is to get a test off the ground, the more agile and efficient the experimentation program becomes.

This is where good communication plays a pivotal role. Clear, consistent communication between product, engineering, and data teams is essential for minimizing effort. Everyone involved needs to know what tools and processes are available to them. When teams collaborate effectively, they can anticipate potential roadblocks, avoid duplicated effort, and move faster. Without strong communication, you risk bottlenecks, misaligned priorities, and confusion over responsibilities—all of which slow down the testing process. In short, the easier you make the process—and the better your teams communicate—the more agile your experimentation program becomes.

Bad Statistics

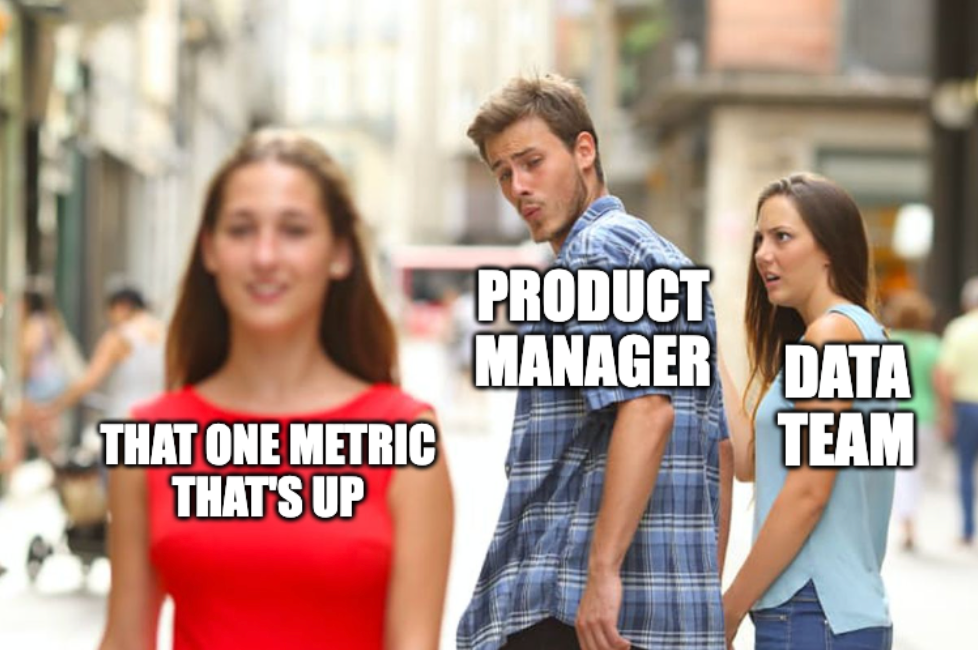

There are about a million ways to screw up interpreting data, and any of them can sink an experimentation program. The most common are peeking (making a decision on an experiment before it's completed), multiple-comparison problems (adding metrics or slicing the data until something shows what you want to see), and plain cherry-picking (ignoring bad results). I’ve seen experiments where every metric was down, save one that was partially up, get called a winner because the product manager wanted it to win and focused on that one metric. Used this way, experiment results confirm biases instead of reflecting reality.
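
To see why peeking is so dangerous, here is a rough simulation sketch. It runs many A/A-style experiments (both arms share the same true conversion rate, so every "winner" is a false positive) and compares stopping at the first significant peek against checking only once at the end. The sample sizes, the 10% base rate, and the simple two-proportion z-test are all illustrative assumptions, not how any particular platform computes results.

```python
import math
import random


def z_score(conv_a: int, conv_b: int, n: int) -> float:
    """Two-proportion z statistic for two arms of equal size n."""
    pooled = (conv_a + conv_b) / (2 * n)
    se = math.sqrt(2 * pooled * (1 - pooled) / n)
    return 0.0 if se == 0 else (conv_b / n - conv_a / n) / se


def false_positive_rates(num_sims=500, users_per_arm=1000, num_peeks=10,
                         base_rate=0.10, z_crit=1.96, seed=7):
    """Return (rate when peeking, rate when checking only at the end)."""
    rng = random.Random(seed)
    # With the defaults, the last peek coincides with the final sample size.
    step = users_per_arm // num_peeks
    peeking_hits = final_hits = 0
    for _ in range(num_sims):
        conv_a = conv_b = 0
        flagged_at_any_peek = False
        for n in range(1, users_per_arm + 1):
            # Both arms convert at the same true rate: there is no real effect.
            conv_a += rng.random() < base_rate
            conv_b += rng.random() < base_rate
            if n % step == 0 and abs(z_score(conv_a, conv_b, n)) > z_crit:
                flagged_at_any_peek = True
        peeking_hits += flagged_at_any_peek
        final_hits += abs(z_score(conv_a, conv_b, users_per_arm)) > z_crit
    return peeking_hits / num_sims, final_hits / num_sims


if __name__ == "__main__":
    peeking, final_only = false_positive_rates()
    print(f"false positives with peeking: {peeking:.1%}")
    print(f"false positives, final check: {final_only:.1%}")
```

The final-only rate should land near the nominal 5% for a 1.96 threshold, while the peeking rate comes out noticeably higher, because each extra look is another chance for noise to cross the line.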

The fix? Train your team to interpret statistics correctly. Your data team should be the center of excellence, ensuring experiments are designed well and results are objective - not just confirming someone's bias. Teach your teams about the common problems with experimentation and about effects like the Semmelweis reflex, Goodhart’s Law, and Twyman's Law.

One of the best ways to build trust in your experimentation program is to standardize how you communicate results. Tools like GrowthBook offer templated readouts that give everyone a clear, consistent understanding of what really happened in the A/B test. These templates, set up by experts, embed best practices to ensure that business stakeholders can follow along, keeping everyone on the same page.

With consistent, templated results, your team gets reliable insights that reflect reality, even if the truth stings a little. This clarity fosters a culture where data—not gut feelings—drives decisions.

Cognitive Dissonance

Design teams often conduct user research to uncover what their audience wants, typically through mock-ups and small-scale user testing. They watch users interact with the product and collect feedback. Everything looks great on paper, and the design seems airtight. But then they run an A/B test with thousands of real users, and—surprise!—the whole thing flops. Cue the head-scratching and murmurs about whether A/B testing is even worth it.

This situation often triggers a clash of egos between design and product teams. The designers swear by their user research, while product teams trust the cold, hard data from the A/B test. The trick here is to remove the "us vs. them" mentality and remember that both teams have the same goal: building the best possible product.

Treat A/B testing as a natural extension of the design process. It’s not about proving one team right or wrong; it's about refining the product based on real-world data. However, product teams must also be careful not to use data as a shield to justify dark patterns that degrade user trust. Sometimes the designers' intuition is correct.

By collaborating closely, design and product teams can run A/B tests to validate their ideas with a much larger audience, gathering more data to iterate and ultimately improve their designs. Testing doesn’t replace design; it enhances it by providing insights that make good designs even better.

Lack of Trust

The last two issues highlight the importance of trust in your data, which deserves its own section. If the team misuses an experiment or draws incorrect conclusions from it, like announcing spurious results, you start eroding trust in the program. Similarly, when you have a counterintuitive result, you will need a high degree of trust in the data and statistics to overcome internal bias. When trust is low, teams may revert to the norm of not experimenting.

The solution, obviously, is to keep trust in your program high. Make sure that your team runs A/A tests to verify that the assignment and statistics are working as expected. Make sure you monitor the health of your experiments and the quality of your data while they are running. If a result is challenged, be open to replicating the experiment to verify the results. After a time, your team can learn to have the right amount of trust in the results.
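
One example of a health check you can run on an A/A test, or on any live experiment, is a sample ratio mismatch check: does the observed traffic split match the split you configured? The sketch below is a minimal, assumption-laden version using a chi-square test with one degree of freedom. The function name and the 0.001 alert threshold are illustrative choices, not GrowthBook's API.

```python
import math


def srm_p_value(users_a: int, users_b: int, expected_share_a: float = 0.5) -> float:
    """P-value for the observed split under the configured split (chi-square, 1 dof)."""
    total = users_a + users_b
    if total <= 0 or not 0.0 < expected_share_a < 1.0:
        raise ValueError("need at least one user and a share strictly between 0 and 1")
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((users_a - expected_a) ** 2 / expected_a
            + (users_b - expected_b) ** 2 / expected_b)
    # For one degree of freedom, the chi-square tail equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    ALERT_THRESHOLD = 0.001  # hypothetical cutoff; pick one that suits your traffic
    for a, b in ((5000, 5000), (5000, 5400)):
        p = srm_p_value(a, b)
        status = "investigate assignment" if p < ALERT_THRESHOLD else "looks healthy"
        print(f"{a} vs {b}: p = {p:.5f} -> {status}")
```

A very small p-value doesn't tell you what went wrong, only that the split is unlikely to be chance, which is a cue to look at assignment, tracking, or bot filtering before trusting the metrics.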

Lack of Leadership Buy-In

Leaders love to say they’re “data-driven,” but when their pet projects start getting tested and don’t pan out, they’re suddenly a lot less interested in data. When you start measuring, you get a lot of failures and projects that don’t impact metrics. I’ve seen it time and time again: tests come back with no significant results, and leadership starts questioning why we’re testing at all.

It’s especially tough when they expect big, immediate wins, and the incremental nature of testing leaves them cold. When only ⅓ of all ideas win, this becomes a huge barrier, since leadership is often focused on timely delivery.

Educate leadership on the long game of experimentation. Data-driven decision-making doesn’t mean instant success; it means learning from failures and iterating toward better solutions. Admitting you don’t always know what’s best can be tough—especially for highly paid leaders. It can bruise egos when a beloved idea flops in a test, but that’s part of the process. There's plenty written about the HiPPO problem (Highest Paid Person’s Opinion), where decisions are driven by rank rather than data.

The trick is to build trust in the experimentation process by showing that most ideas fail—and that’s okay—because you still benefit from the results. To demonstrate your program's value, focus on long-term impact and cumulative wins. Even small, incremental improvements lead to major gains over time. Insights from each test, even the "failures," inform smarter decisions, improving the organization's ability to predict what works. Highlight that if you had not tested an idea, it might have had a negative impact, so it should be seen as a win or “save.” As you learn what users like and don’t like, future development will include these patterns, making future projects more successful. This can be difficult to communicate, but it’s essential.
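
If it helps when making this case to leadership, the compounding claim is easy to show with a few lines of arithmetic. This sketch assumes each shipped win is a relative lift on the same metric and that the lifts persist and multiply, which real wins often only approximate. The ten 1% wins are a hypothetical example.

```python
from typing import Iterable


def cumulative_lift(lifts: Iterable[float]) -> float:
    """Combined relative lift from a sequence of multiplicative relative lifts."""
    combined = 1.0
    for lift in lifts:
        combined *= 1.0 + lift
    return combined - 1.0


if __name__ == "__main__":
    shipped_wins = [0.01] * 10  # hypothetical: ten separate 1% improvements
    print(f"combined lift: {cumulative_lift(shipped_wins):.2%}")
```

Ten 1% wins come out slightly above 10% in total, and the same function can include negative numbers if you want to show what shipping an untested loser would have cost.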

Poor Process

Prioritization is hard. Most experimentation programs try to rank projects using systems like PIE or ICE, which assign numerical scores to factors like potential impact. But the impact of a project is notoriously hard to predict, and it doesn’t become objective just because one puts a number on it. These systems also tend to oversimplify the complexity of experimentation while making it harder to get tests running quickly. A bad process, however well-intended, can reduce experiment velocity and the chance of a successful program.

One solution to this is to give autonomy to teams closest to the product. Let them choose what experiments to run next, with loose prioritization from above. The more tests you run, the more likely you are to hit something big, so focus on velocity.

Conclusion

Experimentation can be a game-changer if you avoid these common pitfalls. Hopefully, this list will give you some points to consider that can improve your chances of having a successful experimentation program. If any of these failure modes sound familiar, try experimenting with some of the solutions mentioned for a month or two—you might be surprised by the results.