Holdouts answer a deceptively simple question: “What did all of this shipping actually do?” In GrowthBook, a holdout keeps a small, durable control group away from new features, experiments, and bandits, then compares them to everyone else over time. That comparison is your long-run, cumulative impact—no guess work, no complicated de-biasing algorithms.

You can read more about holdouts in this blog post, Holdouts in GrowthBook: The Gold Standard for Measuring Cumulative Impact and in our documentation. But in this post, I’m going to talk about some of the nitty-gritty choices we made and why we made them.

1) We measure everything that happened, not just shipped winners

There are two different approaches out there to measuring impact with holdouts:

- “Measure everything” approach (used in GrowthBook). The holdout group stays off all new functionality; everyone else proceeds as normal—experimenting, shipping, backtracking, and iterating. We then compare a small, like-for-like measurement subset of the general population to the holdout. That design deliberately measures the full experience of what happened over the quarter, not just the curated list of winners. It’s a more faithful assessment of the world your users actually saw.

- “Clean-room” approach. The holdout group still stays off all new functionality. However, you also withhold a holdout test group that only sees shipped features. This slice is used to compare against your holdout; meanwhile, the remaining traffic is where day-to-day experiments run.

Here’s another way to think about it. Imagine your traffic is split into 3 groups with a 5% holdout:

- Holdout (5%): The same across both groups. Never sees any new feature

- Measurement (5%): The key difference is here. In the “clean room” approach, they are held out until a feature is shipped and then get the winning variation. In the “measure everything” approach, they are identical to the General group, and are used to experiment and ship

- General (90%): The same across both groups. Used to experiment and ship

How do they compare?

The “clean-room” approach provides you with the most accurate assessment of what you shipped. You get a sample that only sees the shipped features and does not have a history of seeing features you decided not to ship. This can really help you know if “what you shipped worked.”

However, it has 3 major downsides:

- It leaves you blind to what actually happened to the vast majority of users along the way (failed experiments, feature false starts, etc.). If you want to know if your overall program is headed in the right direction, you have to include the costs of running experiments, exposing users to losing variations, and more. While “measure everything” may be a worse estimate of simply the cumulative impact of winners, it more accurately represents the impact your team had. What’s more, not knowing what is going on with 90+% of your entire user base is quite a cost to pay.

- Furthermore, it may actually be a worse estimate of the impact going forward. If seeing failed experiments in the past better represents how future failed experiments may interact with your shipped features, then you actually want your holdout estimate to include these past failed experiments.

- You end up with lower power for your regular tests. By splitting another 5% off of the general population, all of your regular tests will have 5% less traffic to ship. This could slow down your overall experimentation program and lead to worse decisions.

For these reasons, at GrowthBook we opted for the approach where you “measure everything.”

2) How feature evaluation works: prerequisites

Under the hood, Holdouts rely on prerequisites. Before any feature rule or experiment is evaluated, GrowthBook checks the holdout prerequisite and diverts holdout users to default values. This acts just like a regular rule in your Feature evaluation flow to make it easy to understand what's happening.

Everyone else flows through your rules as usual. Because that evaluation triggers on every included feature or experiment, holdout exposure can occur at different moments in a user’s journey.

That has two important implications for analysis:

- Prefer metrics with lookback windows. Since users can encounter the holdout at varying times, fixed conversion windows anchored to a single “first exposure” are often ill-posed for long-running, multi-feature measurement. GrowthBook enforces this: you can’t add conversion-window metrics to a holdout; instead use long-range metrics without windows or with lookback windows.

- Use the built-in Analysis Period when you’re ready to read the holdout: freeze new additions, keep splitting traffic, and let GrowthBook apply dynamic lookback windows per experiment/metric so you measure exactly the period you care about.

3) Compliance by default: project-level enforcement

Holdouts are scoped to Projects—a core GrowthBook organizing unit for features, metrics, experiments, SDKs, and permissions. Assign a holdout to a project and, from that point on, new features, experiments, and bandits created in that project inherit the holdout by default (there’s an escape hatch if you truly need it). This keeps your baseline clean without relying on every engineer, product manager, or data analyst remembering to use the holdout.

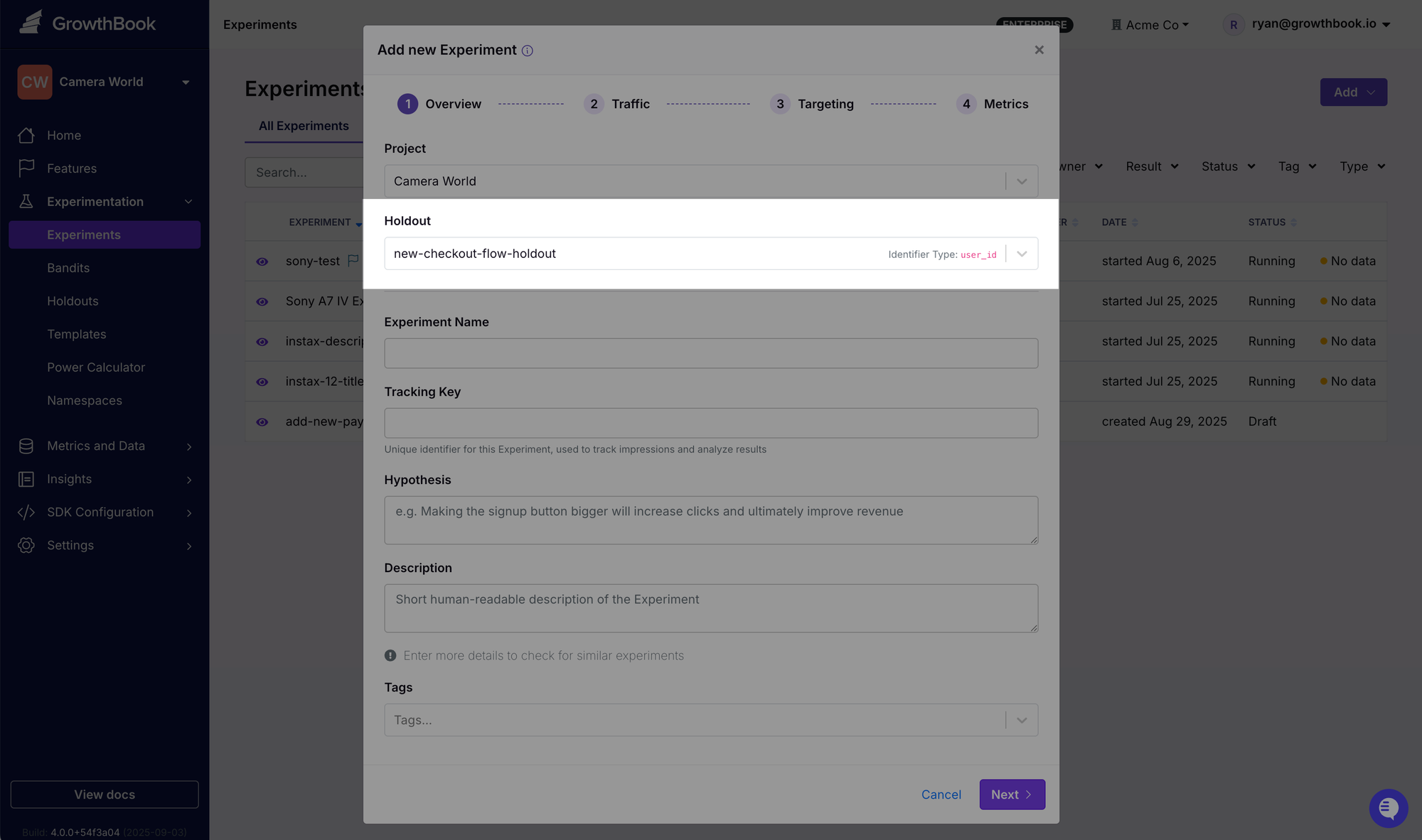

Under the hood, each time your team creates an experiment or a feature in a Project, we check if that Project has any associated holdouts. If there is one, we pre-select it, and allow you to opt out with a warning. If there is more than one holdout, we select the first one by default, but experimenters can switch their selected holdout. We recommend you avoid this situation. If there are any holdouts that have no project scoping, they are available to all projects, and we also recommend avoiding this unless you are running a global holdout.

This adds one more reason to use Projects:

- Right-sized access. Projects already let you define who can see and change what, including “no access” when needed. Holdouts ride along with those boundaries.

- Cleaner authoring flows. Creators see a Holdout field during setup; if multiple holdouts exist (not recommended), they can select the correct one. Otherwise it’s on by default—compliance without cognitive load.

- Comparable program reads. Teams running their own project-scoped holdouts can produce apples-to-apples quarterly reads of cumulative impact across surfaces.

TL;DR

- Measure reality, not a curated subset. Our holdouts capture everything that happened during the holdout period.

- Prerequisites power correctness. Every evaluation respects the holdout; pair that with lookbacks or the analysis window for clean metric reads.

- Compliance is built-in. Project-level enforcement makes holdouts the default, not an afterthought.

Get started by reading our docs or by signing up.