The 30-Second Summary

A/B testing (also called split testing) is a method of comparing two or more versions of a webpage, app feature, or marketing element to determine which performs better. You show version A (the control) to one group and version B (the variant) to another, then measure which drives better results for your business goals.

Why it matters: A/B testing removes guesswork from decision-making, turning "we think" into "we know" based on actual user behavior and statistical evidence.

Key takeaway: Done right, A/B testing can increase conversions without spending more on traffic, validate ideas before full implementation, and build a culture of continuous improvement.

What Exactly is A/B Testing?

Imagine you're at a coffee shop debating whether to put your tip jar by the register or at the pickup counter. Instead of guessing, you try both locations on alternating days and measure which generates more tips. That's A/B testing in the physical world.

In digital environments, A/B testing works by:

- Randomly splitting your audience into two (or more) groups

- Showing different versions of the same element to each group simultaneously

- Measuring the impact on predetermined metrics

- Declaring a winner based on statistical significance

- Implementing the better version for all users

The Critical Difference: Testing vs. Guessing

Without A/B testing, decisions rely on:

- HiPPO (Highest Paid Person's Opinion)

- Best practices that may not apply to your audience

- Assumptions about user behavior

- Competitor copying without context

With A/B testing, decisions are based on:

- Actual user behavior from your specific audience

- Statistically validated results

- Measurable business impact

- Continuous learning about what works

Why A/B Testing is Essential in 2025

1. Maximize Existing Traffic Value

Traffic acquisition costs (TAC) have increased 222% since 2019. And with the rise of generative AI, the effectiveness of long-standing acquisition strategies is more uncertain than ever. A/B testing helps you extract more value from visitors you already have, often delivering higher ROI than acquiring new traffic.

2. Reduce Risk of Major Changes

Instead of redesigning your entire site and hoping for the best, test changes incrementally. If something doesn't work, you've limited the damage to a small test group.

3. Resolve Internal Debates with Data

Stop endless meetings debating what "might" work. Run a test, get data, make decisions. As one PM put it: "A/B testing turned our three-hour design debates into 30-minute data reviews."

4. Discover Surprising Insights

Microsoft found that subtle changes to the colors used on Bing's search results pages generated more than $10 million in additional annual revenue. While you shouldn't expect a $10 million gain from a single A/B test (that's an outlier), wins like this only become possible once you start testing for them.

5. Build Competitive Advantage

While competitors guess, you know. Netflix attributes much of its success to running thousands of tests annually, optimizing everything from thumbnails to recommendation algorithms.

What Can You Test? (Almost Everything)

Website Elements

- Headlines and copy: Different value propositions, tones, lengths

- Call-to-action buttons: Color, size, text, placement

- Images and videos: Product photos, hero images, background videos

- Forms: Number of fields, field types, progressive disclosure

- Navigation: Menu structure, sticky headers, breadcrumbs

- Layout: Single vs. multi-column, card vs. list view

- Pricing: Display format, anchoring, bundling options

- Social proof: Testimonials, reviews, trust badges placement

Beyond Websites

- Email campaigns: Subject lines, send times, content length

- Mobile apps: Onboarding flows, feature placement, notification timing

- Ads: Creative, copy, targeting parameters

- Product features: Functionality, user interface, defaults

- Internal tools: Dashboard layouts, workflow steps

- Algorithms: Recommendations, featured items

- AI: Prompts, models

The Science Behind A/B Testing

Statistical Foundations: Two Approaches

Modern A/B testing platforms offer two statistical frameworks, each with distinct advantages:

Bayesian Statistics (Often the Default)

Bayesian methods provide more intuitive results by expressing outcomes as probabilities rather than binary significant/not-significant decisions. Instead of p-values, you get statements like "there's a 95% chance variation B is better than A."

This approach:

- Is often described as more tolerant of continuous monitoring, though stopping the moment results look good can still mislead

- Incorporates prior knowledge to avoid over-interpreting small samples

- Provides probability distributions showing the range of likely outcomes

- Calculates "risk" or expected loss if you choose the wrong variation
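To make "chance to win" and "risk" concrete, here is a minimal sketch that estimates both by sampling from Beta posteriors. It assumes a simple Beta-Binomial model with uniform Beta(1, 1) priors and Monte Carlo sampling; the function name and the visitor counts are hypothetical, and real platforms may use different priors, metrics, and numerical methods.

```python
import random

def bayesian_summary(conv_a, n_a, conv_b, n_b, draws=20_000, seed=7):
    """Monte Carlo chance-to-win and expected loss, using Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    loss_if_choose_b = 0.0
    for _ in range(draws):
        # Posterior for each conversion rate: Beta(1 + conversions, 1 + non-conversions)
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
        # Expected loss: conversion rate we give up in draws where B is actually worse
        loss_if_choose_b += max(rate_a - rate_b, 0.0)
    return wins / draws, loss_if_choose_b / draws

# Hypothetical results: 300/10,000 conversions for A, 360/10,000 for B
chance_to_win, expected_loss = bayesian_summary(300, 10_000, 360, 10_000)
print(f"Chance B beats A: {chance_to_win:.1%}")
print(f"Expected loss if we ship B: {expected_loss:.4%} (absolute conversion rate)")
```

A high chance to win combined with a tiny expected loss is the kind of result that lets a team ship B with little downside, even if B's true advantage turns out smaller than observed.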

Frequentist Statistics (Traditional Approach)

Frequentist methods use hypothesis testing with p-values and confidence intervals. This classical approach:

- Requires predetermined sample sizes

- Uses statistical significance thresholds (typically 95%)

- Provides clear yes/no decisions based on p-values

- Is familiar to those with traditional statistics backgrounds

Key concepts both approaches share:

1. Null Hypothesis (H₀): The assumption that there's no difference between versions A and B

2. Alternative Hypothesis (H₁): Your prediction that version B will perform differently

3. Statistical Significance/Confidence: How strongly the data indicate that the observed difference is not just random chance

4. Statistical Power: The probability of detecting a real difference when it exists (typically aim for 80%+)

Many modern platforms like GrowthBook default to Bayesian but offer both engines, letting teams choose based on their preferences and expertise. Both approaches can utilize advanced techniques like CUPED for variance reduction and sequential testing for early stopping.

Sample Size: The Foundation of Reliable Tests

You need enough data to trust your results. The required sample size depends on:

- Baseline conversion rate: Your current performance

- Minimum detectable effect (MDE): The smallest improvement you care about

- Statistical significance threshold: Usually 95%

- Statistical power: Usually 80%

Example calculation:

- Current conversion rate: 3%

- Want to detect: 20% relative improvement (to 3.6%)

- Required confidence: 95% (two-sided test)

- Statistical power: 80%

- Result: ~14,000 visitors per variation
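The calculation above can be reproduced with the standard normal-approximation formula for comparing two proportions. This is a minimal sketch, assuming a two-sided test and equal traffic per variation; the function name is hypothetical, and testing platforms may use different formulas. For a 3% baseline and a 20% relative MDE it returns roughly 13,900, in line with the ~14,000 figure.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variation(baseline: float, relative_mde: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed per variation for a two-sided two-proportion z-test."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)            # expected variant conversion rate
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2                          # pooled rate under the null hypothesis
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

print(sample_size_per_variation(0.03, 0.20))
```

Halving the MDE roughly quadruples the required sample, which is why small sites need bolder changes.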

Tools to help: Most A/B testing platforms include built-in power calculators and sample size estimators. These tools eliminate guesswork by automatically calculating the visitors needed based on your specific metrics and goals.

The Danger of Peeking

Checking results before reaching sample size is like judging a marathon at the 5-mile mark. Early results fluctuate wildly and often reverse completely.

Why peeking misleads:

- Small samples amplify random variation

- Winners and losers often swap positions multiple times

- "Regression to the mean" causes early extremes to normalize

- Each peek increases your false positive rate

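The solution: Set your sample size in advance, run the test to completion, then analyze. If you genuinely need to act on interim results, use a method built for it, such as sequential testing.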
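To see why each peek raises the false positive rate, the sketch below simulates A/A tests (both groups share the same true conversion rate, so every "winner" is a false positive) and checks significance after each of ten batches of visitors. The batch size, conversion rate, and function names are illustrative assumptions. Stopping at the first significant peek typically yields a false positive rate well above the nominal 5%, while a single analysis at the end stays close to it.

```python
import random
from math import sqrt
from statistics import NormalDist

Z_CRIT = NormalDist().inv_cdf(0.975)  # two-sided 95% threshold, about 1.96

def z_stat(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z statistic."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0
    return (conv_b / n_b - conv_a / n_a) / se

def simulate_aa(n_sims=1000, looks=10, batch=100, rate=0.10, seed=42):
    """Run A/A tests and compare 'stop at any significant peek' vs 'analyze once'."""
    rng = random.Random(seed)
    peek_hits = final_hits = 0
    for _ in range(n_sims):
        conv_a = conv_b = n = 0
        significant_at_some_peek = False
        for _ in range(looks):
            conv_a += sum(rng.random() < rate for _ in range(batch))
            conv_b += sum(rng.random() < rate for _ in range(batch))
            n += batch
            if abs(z_stat(conv_a, n, conv_b, n)) > Z_CRIT:
                significant_at_some_peek = True  # a peeker would stop and declare a winner
        peek_hits += significant_at_some_peek
        final_hits += abs(z_stat(conv_a, n, conv_b, n)) > Z_CRIT
    return peek_hits / n_sims, final_hits / n_sims

peek_rate, final_rate = simulate_aa()
print(f"False positives, stop at any peek: {peek_rate:.1%}")
print(f"False positives, analyze once at the end: {final_rate:.1%}")
```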

Your Step-by-Step A/B Testing Process

Step 1: Research and Identify Opportunities

Start with data, not opinions:

- Analyze your analytics for high-traffic, high-impact pages

- Review heatmaps and session recordings

- Collect customer feedback and support tickets

- Run user surveys about friction points

- Audit your conversion funnel for drop-offs

Prioritize using ICE scoring:

- Impact: How much could this improve key metrics?

- Confidence: How sure are you it will work?

- Ease: How simple is it to implement?
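A backlog can be ranked with ICE in a few lines. This sketch assumes each factor is scored from 1 to 10 and that the ICE score is their simple average (some teams multiply the three scores instead); the test ideas and their scores are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TestIdea:
    name: str
    impact: int      # 1-10: how much could this move the key metric?
    confidence: int  # 1-10: how sure are we it will work?
    ease: int        # 1-10: how simple is it to build and launch?

    @property
    def ice_score(self) -> float:
        # Simple average; some teams multiply the three scores instead
        return (self.impact + self.confidence + self.ease) / 3

# Hypothetical backlog for illustration
backlog = [
    TestIdea("Shorten checkout form", impact=8, confidence=6, ease=7),
    TestIdea("New homepage headline", impact=6, confidence=5, ease=9),
    TestIdea("Redesign pricing page", impact=9, confidence=4, ease=3),
]

ranked = sorted(backlog, key=lambda idea: idea.ice_score, reverse=True)
for idea in ranked:
    print(f"{idea.ice_score:.1f}  {idea.name}")
```

Note how the high-impact pricing redesign drops to last: low confidence and low ease outweigh its potential.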

Step 2: Form a Strong Hypothesis

Weak hypothesis: "Let's try a green button"

Strong hypothesis: "By changing our CTA button from gray to green (change), we will increase contrast and draw more attention (reasoning), resulting in a 15% increase in click-through rate (predicted outcome) as measured over 14 days with 95% confidence (measurement criteria)."

Hypothesis framework: "By [specific change], we expect [specific metric] to [increase/decrease] by [amount] because [reasoning based on research]."

Step 3: Design Your Test

Critical rules:

- Test one variable at a time (multiple changes = unclear results)

- Ensure equal, random traffic distribution

- Keep everything else identical between versions

- Consider mobile vs. desktop separately

- Account for different user segments if needed
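Equal, random traffic distribution is commonly implemented by hashing a stable user ID together with an experiment key, so each user lands in a pseudo-random bucket and sees the same variation on every visit. The function name, experiment key, and SHA-256 scheme below are illustrative assumptions, not the algorithm of any particular platform.

```python
import hashlib

def assign_variation(user_id: str, experiment_key: str,
                     variations=("A", "B"), weights=(0.5, 0.5)) -> str:
    """Deterministically map a user to a variation by hashing user + experiment."""
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode()).hexdigest()
    # Convert the first 8 hex characters (32 bits) to a number in [0, 1)
    bucket = int(digest[:8], 16) / 2**32
    cumulative = 0.0
    for variation, weight in zip(variations, weights):
        cumulative += weight
        if bucket < cumulative:
            return variation
    return variations[-1]  # guard against floating-point rounding in the weights

counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variation(f"user-{i}", "cta-color-test")] += 1
print(counts)
```

Including the experiment key in the hash keeps assignments independent across experiments, so users in group B of one test are not systematically in group B of the next.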

Quality assurance checklist (guardrails):

- Both versions load at the same speed

- Tracking is properly implemented

- Test works across all browsers

- Mobile experience is preserved

- No flickering or layout shifts

- Forms and CTAs function correctly

Step 4: Calculate Required Sample Size

Never start without knowing your endpoint. Most modern A/B testing platforms include power calculators that do the heavy lifting for you.

Input these parameters:

- Current conversion rate (from your analytics)

- Minimum improvement worth detecting (be realistic)

- Confidence level (typically 95%, i.e., a 5% significance level)

- Statistical power (typically 80%)

The platform will calculate exactly how many visitors you need per variation. This removes the guesswork and ensures your test has enough power to detect meaningful differences.

Time consideration: Run tests for at least one full business cycle (usually 1-2 weeks minimum) to account for:

- Weekday vs. weekend behavior

- Beginning vs. end of month patterns

- External factors (news, weather, events)

Step 5: Launch and Monitor (Without Peeking!)

Launch checklist:

- Set up your test in your A/B testing tool

- Configure goal tracking and secondary metrics

- Document test details in your testing log

- Set a calendar reminder for the test end date

- Resist the urge to check results early

Monitor only for:

- Technical errors or bugs

- Extreme business impact (massive revenue loss)

- Sample ratio mismatch (uneven traffic split)
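A sample ratio mismatch check is usually a chi-square goodness-of-fit test comparing observed visitor counts with the intended split. This sketch handles a two-way split (one degree of freedom, where the p-value equals erfc(sqrt(χ²/2))); the function name, counts, and the strict 0.001 alert threshold are assumptions for illustration.

```python
from math import erfc, sqrt

def srm_p_value(observed_a: int, observed_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit test (1 degree of freedom) for a two-way split."""
    total = observed_a + observed_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((observed_a - expected_a) ** 2 / expected_a
            + (observed_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, the chi-square survival function is erfc(sqrt(chi2 / 2))
    return erfc(sqrt(chi2 / 2))

p = srm_p_value(50_000, 48_500)
if p < 0.001:
    print(f"Possible sample ratio mismatch (p = {p:.2g}); investigate before analyzing")
```

A 1.5% imbalance looks minor, but at this volume it is very unlikely to be chance and usually points to a bug in assignment, redirects, or tracking.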

Step 6: Analyze Results Properly

Beyond the winner/loser binary:

- Check statistical significance (e.g., p-value < 0.05 with a frequentist engine, or your chance-to-win threshold with a Bayesian one)

- Verify sample size was reached

- Look for segment differences (mobile vs. desktop, new vs. returning)

- Analyze secondary metrics (did conversions increase but quality decrease?)

- Consider practical significance (is 0.1% lift worth implementing?)

- Document learnings regardless of outcome
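For a frequentist analysis of conversion rates, a two-proportion z-test gives the p-value, and a confidence interval on the lift helps with practical significance. This is a minimal sketch using the normal approximation; the function name and the 300/10,000 vs. 360/10,000 counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_test(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided z-test for conversion rates, plus a CI for the absolute lift."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Unpooled standard error for the confidence interval on the difference
    se_diff = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    ci = (p_b - p_a - z_crit * se_diff, p_b - p_a + z_crit * se_diff)
    return p_value, ci

p_value, (low, high) = two_proportion_test(300, 10_000, 360, 10_000)
print(f"p-value: {p_value:.3f}")
print(f"95% CI for absolute lift: {low:.2%} to {high:.2%}")
```

Here the result is significant, but the interval's lower end sits close to zero, which is exactly the situation where asking "is this lift worth implementing?" matters.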

Step 7: Implement and Iterate

If your variation wins:

- Implement for 100% of traffic

- Monitor post-implementation performance

- Test iterations to maximize the improvement

- Apply learnings to similar pages/elements

If your variation loses:

- This is still valuable learning

- Analyze why your hypothesis was wrong

- Test the opposite approach

- Document insights for future tests

If it's inconclusive:

- You may need a larger sample size

- The difference might be too small to matter

- Test a bolder variation

Advanced A/B Testing Strategies

1. Sequential Testing

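Instead of running a single A vs. B test, run A vs. B, then pit the winner against C, building improvements incrementally. Note that this champion/challenger approach is different from the sequential statistical methods used to stop tests early.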

2. Bandit Testing

Automatically shift more traffic to winning variations during the test, maximizing conversions while learning.
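One common bandit algorithm is Thompson sampling: for each visitor, draw a plausible conversion rate for every variation from its Beta posterior and show the variation with the highest draw. This simulation is a minimal sketch; the function name, the Beta(1, 1) priors, and the true rates of 5% and 10% are assumptions chosen for illustration.

```python
import random

def thompson_sampling(true_rates, visitors=5_000, seed=3):
    """Simulate a Beta-Bernoulli Thompson sampling bandit; return visitors per arm."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    pulls = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible conversion rate for each arm from its Beta posterior
        samples = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = max(range(len(true_rates)), key=lambda i: samples[i])
        pulls[arm] += 1
        # Simulated visitor converts at the arm's true rate, which the bandit never sees
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls

print(thompson_sampling([0.05, 0.10]))
```

The trade-off: because allocation depends on earlier results, bandits earn more conversions during the test but make an unbiased estimate of the effect size harder to obtain than a fixed 50/50 split.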

3. Personalization Layers

Test different experiences for different segments (new vs. returning, mobile vs. desktop, geographic regions).

4. Full-Funnel Testing

Don't just test for initial conversions—measure downstream impact on retention, lifetime value, and referrals.

5. Qualitative + Quantitative

Combine A/B tests with user research to understand not just what works, but why it works.

Common A/B Testing Mistakes (And How to Avoid Them)

Mistake #1: Testing Without Traffic

Problem: Running tests on pages with <1,000 visitors/week

Solution: Focus on highest-traffic pages or make bolder changes that require smaller samples to detect

Mistake #2: Stopping Tests at Significance

Problem: Ending tests as soon as p-value hits 0.05

Solution: Predetermine sample size and duration; stick to it regardless of interim results

Mistake #3: Ignoring Segment Differences

Problem: Overall winner performs worse for valuable segments

Solution: Always analyze results by key segments before implementing

Mistake #4: Testing Tiny Changes

Problem: Button shade variations when the whole page needs work

Solution: Match change boldness to your traffic volume; small sites need bigger swings

Mistake #5: One-Hit Wonders

Problem: Running one test then moving on

Solution: Create a testing culture with regular cadence and iteration

Mistake #6: Significance Shopping

Problem: Testing 20 metrics hoping one shows significance

Solution: Choose primary metric before starting; treat others as secondary insights
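The arithmetic behind significance shopping is stark. Assuming 20 independent metrics, each tested at a 5% significance level, the chance that at least one looks "significant" by luck alone is about 64%. Real metrics are usually correlated, so the true figure is lower, but still far above 5%. The Bonferroni correction shown here is one simple, conservative fix among several.

```python
alpha = 0.05
metrics = 20

# Chance that at least one of 20 independent metrics is "significant" by luck alone
family_wise_error = 1 - (1 - alpha) ** metrics
print(f"Chance of at least one false positive: {family_wise_error:.0%}")

# Bonferroni correction: a stricter per-metric threshold keeps the overall rate near 5%
bonferroni_alpha = alpha / metrics
print(f"Per-metric threshold after Bonferroni: {bonferroni_alpha:.4f}")
```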

Mistake #7: Seasonal Blindness

Problem: Testing during Black Friday, applying results year-round

Solution: Note external factors; retest during normal periods

Mistake #8: Technical Debt

Problem: Winner requires complex maintenance or breaks other features

Solution: Consider implementation cost in your analysis

Mistake #9: Learning Amnesia

Problem: Not documenting or sharing test results

Solution: Maintain a testing knowledge base; share learnings broadly

Mistake #10: Ignoring Long-Term Cost

Problem: It is easy to mistake a confused user for a converted user

Solution: Avoid dark patterns in your experiment design that boost immediate conversions but result in higher refund rates or brand damage later

Building a Testing Culture

Moving Beyond Individual Tests

The real value of A/B testing isn't any single win—it's building an organization that makes decisions based on evidence rather than opinions.

Cultural pillars:

- Democratize testing: Enable anyone to propose tests (with proper review)

- Celebrate learning: Failed tests that teach are as valuable as winners

- Share broadly: Make results visible across the organization

- Think in probabilities: Replace "I think" with "Let's test"

- Embrace iteration: Every result leads to new questions

Testing Program Maturity Model

Level 1: Sporadic

- Occasional tests when someone remembers

- No formal process

- Results often ignored

Level 2: Systematic

- Regular testing cadence

- Basic documentation

- Some stakeholder buy-in

Level 3: Strategic

- Testing roadmap aligned with business goals

- Cross-functional involvement

- Knowledge sharing practices

Level 4: Embedded

- Testing considered for every change

- Sophisticated segmentation and analysis

- Company-wide testing culture

Level 5: Optimized

- Predictive models guide testing

- Automated test generation

- Testing drives innovation

The Future of A/B Testing

AI-Powered Testing

Machine learning increasingly suggests what to test, predicts results, and automatically generates variations.

Real-Time Personalization

Move beyond testing to delivering the optimal experience for each individual user.

Causal Inference

Advanced statistical methods better isolate true cause-and-effect relationships.

Cross-Channel Orchestration

Test experiences across web, mobile, email, and offline touchpoints simultaneously.

Privacy-First Methods

New approaches maintain testing capability while respecting user privacy and regulatory requirements.

Your Next Steps

Start Today (Even Small)

- Pick one element on your highest-traffic page

- Form a hypothesis about how to improve it

- Run a simple test for two weeks

- Analyze results objectively

- Share learnings with your team

- Test again based on what you learned

Quick Wins to Try First

- Headline on your homepage

- CTA button color and text

- Form field reduction

- Social proof placement

- Pricing page layout

- Email subject lines

Resources for Continued Learning

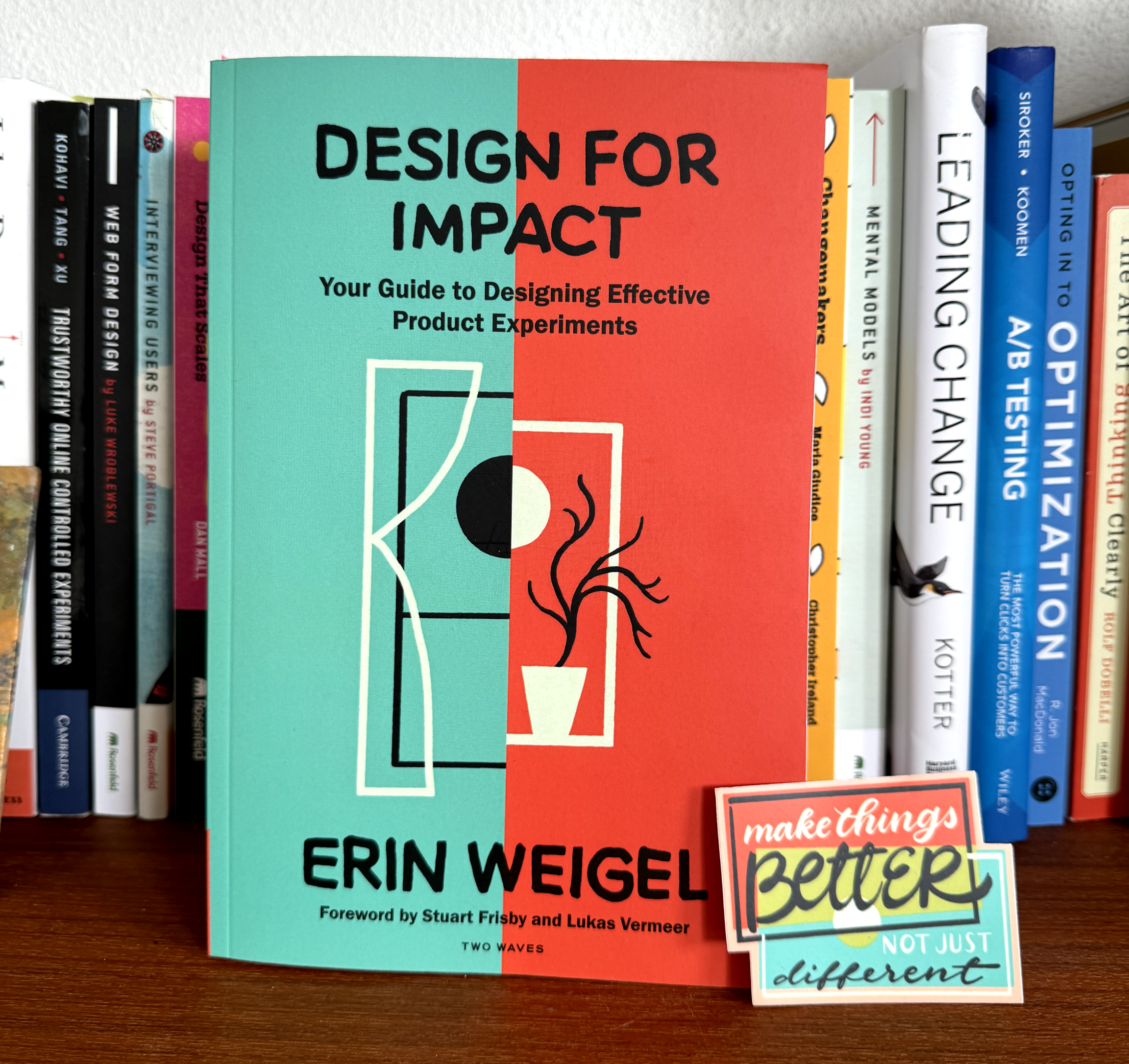

Essential books:

- "Trustworthy Online Controlled Experiments" by Kohavi, Tang, and Xu

- "A/B Testing: The Most Powerful Way to Turn Clicks Into Customers" by Dan Siroker and Pete Koomen

Communities:

Stay updated:

- Follow industry leaders on LinkedIn

- Subscribe to testing tool blogs

- Join local CRO meetups

Conclusion: From Guessing to Knowing

A/B testing transforms how organizations make decisions. Instead of lengthy debates, political maneuvering, and costly mistakes, you get clarity through data.

But remember: A/B testing is a tool, not a religion. Some decisions require vision, creativity, and bold leaps that testing can't validate. The art lies in knowing when to test and when to trust your instincts.

Start small. Test consistently. Learn continuously. Let data guide you while creativity drives you.

The companies that win in 2025 won't be those with the best guesses—they'll be those with the best evidence.